RealClimate: The Scafetta Saga


It has taken 17 months to get a comment published pointing out the obvious errors in the Scafetta (2022) paper in GRL.

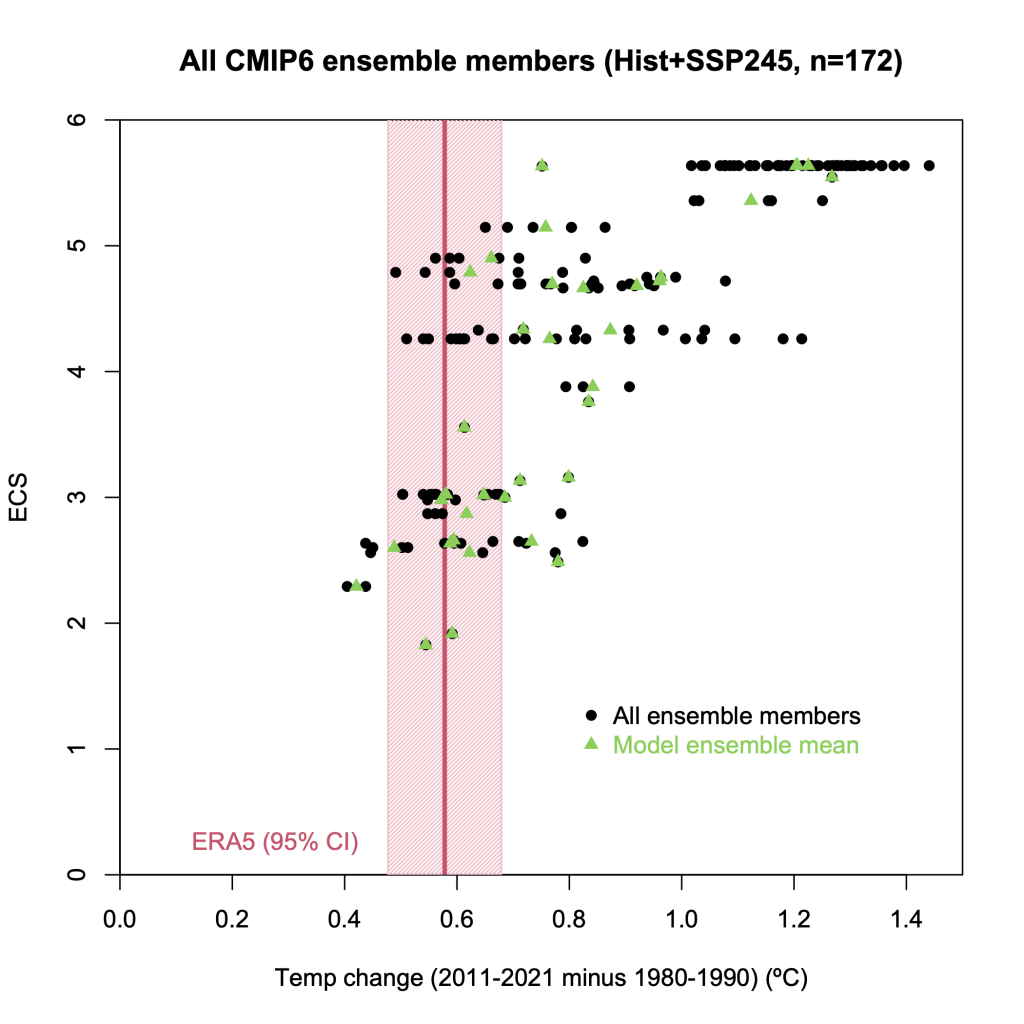

Back in March 2022, Nicola Scafetta published a short paper in Geophysical Research Letters (GRL) purporting to show through ‘advanced’ means that ‘all models with ECS > 3.0°C overestimate the observed global surface warming’ (as defined by ERA5). We (me, Gareth Jones and John Kennedy) wrote a note up within a couple of days pointing out how wrongheaded the reasoning was and how the results did not stand up to scrutiny.

At the time, GRL had a policy not to accept comments for publication. Instead, they had a somewhat opaque and, I assume, rarely used, process by which you could submit a complaint about a paper and upon review, the editors would decide whether a correction, amendment, or even retraction, was warranted. We therefore submitted our note as a complaint that same week.

For whatever reason (and speculation may abound), the original process hit the buffers after a few months, which possibly contributed to a reassessment in December 2022 by the GRL editors and AGU of their policy regarding comments. Henceforth, they would be accepted! [Note this is something many people had been wanting for some time]. After some back and forth on how exactly this would work (including updating the GRL website to accept comments), we reformatted our note as a comment, and submitted it formally on December 12, 2022. We were assured by the editor-in-chief and publications manager that this would be a ‘streamlined’ and ‘timely’ review process.

With respect to our comment, that appeared to be the case: It was reviewed, received minor comments, was resubmitted, and accepted on January 28, 2023.

But there it sat for 7 months!

The issue was that the GRL editors wanted to have both the comment and a reply appear together. However, the reply had to pass peer review as well, and that seems to have been a bit of a bottleneck. But while the reply wasn’t being accepted, our comment sat in limbo. Indeed, the situation inadvertently gives the criticized author(s) an effective delaying tactic since, as long as a reply is promised but not delivered, the comment doesn’t see the light of day. After multiple successive reassurances that it would just take a few weeks longer, Scafetta’s reply was finally accepted (through exhaustion?) and we were finally moved into production on August 18, 2023. The comment (direct link) and reply (direct link) appeared online on September 21, 2023.

All in all, it took 17 months, two separate processes, dozens of emails, and who knows how much internal deliberation for an official comment to get into the journal pointing out issues that were obvious as soon as the paper came out.

Why bother?

This is a perennial question. Why do we need to correct the scientific record in formal ways when we have abundant blogs, PubPeer, and social media, to get the message out? Clearly, many people who come across a technical paper in the literature won’t instantly be aware of criticisms of it on Twitter, or someone’s blog. Not everyone has installed the PubPeer browser extension for getting notified when a paper they are looking at or citing has comments [though you really should!]. And so, since journals remain extremely reluctant to point to third party commentary on their published papers, going through the journals’ own process seems like it’s the only way to get a comment or criticism noticed by the people who are reading the original article. Without that, people may read and cite the material without being aware of the criticisms. Having said that, the original Scafetta paper has so far amassed only 4 citations (not counting this comment and reply), half of which are from Scafetta himself. For comparison, our Hausfather et al (2022) commentary on ‘hot models’ in CMIP6, which came out in May 2022, has been cited 118 times.

The odd thing about how long this has taken is that the substance of the comment was produced extremely quickly (a few days) because the errors in the original paper were both commonplace and easily demonstrated. The time, instead, has been entirely taken up by the process itself. It shouldn’t be beyond the wit of people to reduce that burden considerably. [Perhaps, let us know in the comments if more recent experiences with GRL have improved?].

I’ve previously discussed other ideas, such as short-form journals that could be devoted to post-publication peer reviews and extensions, but this has not (yet!) gained much traction, though AGU has suggested that the online Earth and Space Sciences could be used as such a platform.

What should have happened?

Every claim in the Scafetta paper was wrong and wrongly reasoned. As soon as the journal was notified of that, and had the original process reviewed by independent editors and reviewers, it should have been clear that it would never have passed competent peer review. At that point, the author could have been given a chance to amend the paper to pass a new review, and if they were unwilling or unable to do so, the paper should have been retracted. The COPE guidelines are clear that retraction is warranted in the case of unreliable results resulting from major errors. It does no-one any good to have incorrectly argued claims, inappropriate analyses, and unsupported claims in the literature. It would not have appeared in GRL if it had been competently reviewed, and so why should it remain, now that it has been?

Of course, people sometimes get a bit bent out of shape when their papers are retracted or even if that is threatened, and some authors have gone as far as instigating legal action for defamation against the journals for pursuing it or the newspapers reporting it. I am, however, unaware of any such suit succeeding. Authors do not have any right to be published in their journal of choice, and the judgement of a journal in deciding what does or does not get published in its pages is (and should be) pretty much absolute.

So was the reply worth waiting 7 months for?

Nope. Not in the slightest.

He spends most of the response arguing incorrectly about the accuracy of the ERA5 surface temperatures, something that isn’t even in question: they could be perfect and it wouldn’t affect the point we were making. His confusion is that he thinks that the specific realization of the internal variability that the real world followed is the same as the forced component of the temperature trends that we would hope to capture with climate models. It is not. We discussed this in some detail in a subsequent post when he first made this error in his 2023 Climate Dynamics paper. To be specific, the observed temperature record can be thought of as consisting of a climatological trend, internal variability with a mean of zero, plus structural uncertainty related to how well the observational estimate matches the real world:

$$T_{obs}(t) = T_{clim} + \xi(t) + \epsilon(t)$$

where $T_{clim}$ is the climatological term, $\xi(t)$ the internal variability, and $\epsilon(t)$ the structural uncertainty, with $T_{clim}$ assumed to be constant by definition over each decade, and so

[equation not recovered]

The internal variability term can be estimated from the decadal sample and for GISTEMP or ERA5 it’s around 0.05ºC, while the structural uncertainty term is much smaller (0.016ºC or so). So the 95% confidence interval on the decadal change due to internal variability is therefore around [value not recovered] ºC. With models you can actually run an ensemble and estimate this more directly, and for consistency, the two methods should be comparable. Curiously though, the 95% ensemble spread for the models (with 3 or more simulations) has quite a wide range from 0.05ºC to a whopping 0.42ºC (EC-Earth, a definite outlier), though the model mean is a more reasonable 0.17ºC.
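To get a feel for how little the structural term matters next to the internal variability term, here is a minimal sketch that combines the two magnitudes quoted above in quadrature. Treating both numbers as independent standard deviations is an assumption made purely for illustration, and the function and variable names are hypothetical; this is not the calculation from the comment itself.

```python
import math

# Magnitudes quoted in the text, in ºC. Treating them as independent
# standard deviations is an ASSUMPTION made for this sketch.
SIGMA_INTERNAL = 0.05     # internal variability term
SIGMA_STRUCTURAL = 0.016  # structural uncertainty term


def combine_in_quadrature(*sigmas):
    """Combine independent uncertainties as the root of the summed squares."""
    return math.sqrt(sum(s * s for s in sigmas))


combined = combine_in_quadrature(SIGMA_INTERNAL, SIGMA_STRUCTURAL)

# How much larger the combined value is than the internal term alone.
inflation = combined / SIGMA_INTERNAL

# Fraction of the combined variance contributed by the structural term.
structural_share = SIGMA_STRUCTURAL ** 2 / combined ** 2

print(f"combined = {combined:.4f} ºC")
print(f"inflation over internal term alone = {inflation:.3f}")
print(f"structural share of variance = {structural_share:.1%}")
```

Under those assumptions the structural term inflates the total by only about 5%, so even a perfect observational product would leave the uncertainty essentially unchanged.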

Curiously Scafetta associates the structural uncertainty in annual temperature anomalies in the ERA5 reanalysis with the uncertainty in the in situ surface temperature analyses (like GISTEMP or HadCRUT5) – products that use a totally different methodology whose error characteristics aren’t obviously related at all. In any case, it’s a very small number and the uncertainty in our estimate of the climatological trend is totally dominated by the variance due to the specific realization of the weather. Also curious is his insistence that the calculation of an internal variability component can’t be fundamental because he gets a different number using the monthly variations as opposed to the annual ones. He seems unaware of the influence of auto-correlation.
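The auto-correlation point can be illustrated with a short sketch. It uses the common AR(1) approximation for the effective sample size, n_eff = n(1 - r1)/(1 + r1), which is an assumption chosen here for illustration rather than the method used in the comment. The standard deviation (0.15ºC) and lag-1 correlation (0.8) are hypothetical values, not numbers from either paper.

```python
import math


def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation of a sequence."""
    n = len(x)
    mean = sum(x) / n
    num = sum((x[i] - mean) * (x[i + 1] - mean) for i in range(n - 1))
    den = sum((v - mean) ** 2 for v in x)
    return num / den


def effective_sample_size(n, r1):
    """AR(1) approximation: correlated samples carry less information."""
    return n * (1.0 - r1) / (1.0 + r1)


def standard_error_of_mean(sample_sd, n, r1=0.0):
    """Standard error of the mean, optionally adjusted for lag-1 correlation."""
    return sample_sd / math.sqrt(effective_sample_size(n, r1))


# HYPOTHETICAL illustration: 120 monthly anomalies in a decade.
N_MONTHS = 120
MONTHLY_SD = 0.15  # ºC, assumed
MONTHLY_R1 = 0.8   # assumed lag-1 autocorrelation

naive_se = standard_error_of_mean(MONTHLY_SD, N_MONTHS)
adjusted_se = standard_error_of_mean(MONTHLY_SD, N_MONTHS, r1=MONTHLY_R1)

print(f"naive SE    = {naive_se:.4f} ºC")
print(f"adjusted SE = {adjusted_se:.4f} ºC")
print(f"effective N = {effective_sample_size(N_MONTHS, MONTHLY_R1):.1f}")
```

With these assumed values the 120 monthly points behave like roughly 13 independent samples, so a naive monthly calculation understates the uncertainty by a factor of three. Getting a different number from monthly and annual data is what you would expect if the correlation is ignored.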

Also amusing is his excuse for not looking at the full ensemble in assessing the consistency of specific models. He claims to have taken three runs from each model. But he’s being very disingenuous here. His ‘three runs’ were the ensemble means from three scenarios (the different SSPs from 2015 to 2020), which a) barely differ from each other in forcing because of the minor differences in GHG concentrations over a mere five years and, b) are the ensemble means (at least for the runs with multiple ensemble members)! It is possible that he isn’t aware of what he actually did since it is not stated clearly in either the original paper or this reply, but it is obvious from comparing his results from the SSP2-4.5 scenarios with our Figure 1. For instance, for NCAR CESM2, there are six simulations with deltas of [0.788, 0.861, 0.735, 0.653, 0.682, 0.795] ºC (using the period definition in the original paper) and an ensemble mean change of 0.752ºC. Scafetta’s value for this model is … 0.75ºC. Similarly, for NorESM2-LM, the individual runs have changes of 0.772, 0.632, & 0.444ºC, with an ensemble mean of 0.616ºC. Scafetta’s number? You guessed it, 0.62ºC. It is simply not possible to estimate the ensemble spread from only using the ensemble means. Another oddity of this methodology is that the spread for the models with many ensemble members is much smaller than the spread for models with only a single simulation, since for the single-simulation models you actually do sample some of the internal variability with the three scenarios. For instance, CanESM5 (50 ensemble members) has a spread of 0.03ºC across the three scenarios, and IPSL-CM6A-L (11 ensemble members) has no spread at all! Meanwhile MCM-UA-1-0 and HadGEM3-GC31-LL (with only single runs) have spreads in Scafetta’s table of 0.11ºC and 0.17ºC respectively. [All that effort put in to running initial condition ensembles for nought!]
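The arithmetic above is easy to check. The sketch below takes the individual-run deltas quoted in the text for CESM2 and NorESM2-LM, reproduces the ensemble means, and computes the within-ensemble spread that is lost once only the mean is kept. The function and variable names are illustrative, and the sample standard deviation is just one reasonable choice of spread measure.

```python
import statistics

# Individual-run temperature changes (ºC) as quoted in the text.
cesm2_deltas = [0.788, 0.861, 0.735, 0.653, 0.682, 0.795]
noresm2_lm_deltas = [0.772, 0.632, 0.444]


def summarize(deltas):
    """Ensemble mean, sample standard deviation, and min-to-max range."""
    return {
        "mean": statistics.mean(deltas),
        "sd": statistics.stdev(deltas),
        "range": max(deltas) - min(deltas),
    }


cesm2 = summarize(cesm2_deltas)
noresm2_lm = summarize(noresm2_lm_deltas)

for name, summary in [("CESM2", cesm2), ("NorESM2-LM", noresm2_lm)]:
    print(
        f"{name}: mean = {summary['mean']:.3f} ºC, "
        f"sd = {summary['sd']:.3f} ºC, "
        f"range = {summary['range']:.3f} ºC"
    )
```

The means round to 0.75ºC and 0.62ºC, matching the values in Scafetta’s table, while the individual runs differ from each other by 0.2ºC to 0.3ºC. That spread is exactly the information discarded when only the ensemble mean is carried forward.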

Thus the two points that we made in our comment, that he misunderstood the uncertainty in the climatological trends in the observations and that he didn’t utilize the spread in the model ensembles, which together fatally compromise his conclusions, stand even more clearly now. The additional spin he now wants to put on his results, defining a new concept apparently called a ‘macro-GCM’, has the internal consistency of whipped cream. None of it rescues his patently incorrect conclusions.

Summary

I have absolutely no expectation that this episode will encourage Scafetta to improve his analyses. He’s been doing this kind of thing for almost two decades now. He is too wedded to the conclusions that he wants to let little things like the actual results or consistency intrude. I am slightly more confident that processes at GRL may improve, and the recent change to allow comments is very welcome. Hopefully, this exchange might be helpful for other researchers thinking about the appropriate way to compare models to observations (for instance, it made an appearance in Jain et al, 2023).

The last paragraph in our comment sums it up:

In critiquing the tests in this particular paper, we are not suggesting that hindcast comparisons should not be performed, nor are we claiming that all models in the CMIP6 archive perform equally well. […] However, the claims in Scafetta (2022) are simply not supported by an appropriate analysis and should be withdrawn or amended.

Schmidt et al, 2023

Let us all try to do better in future.

References

N. Scafetta, “Advanced Testing of Low, Medium, and High ECS CMIP6 GCM Simulations Versus ERA5‐T2m”, Geophysical Research Letters, vol. 49, 2022. http://dx.doi.org/10.1029/2022GL097716

G.A. Schmidt, G.S. Jones, and J.J. Kennedy, “Comment on “Advanced Testing of Low, Medium, and High ECS CMIP6 GCM Simulations Versus ERA5‐T2m” by N. Scafetta (2022)”, Geophysical Research Letters, vol. 50, 2023. http://dx.doi.org/10.1029/2022GL102530

N. Scafetta, “Reply to “Comment on ‘Advanced Testing of Low, Medium, and High ECS CMIP6 GCM Simulations Versus ERA5‐T2m’ by N. Scafetta (2022)” by Schmidt et al. (2023)”, Geophysical Research Letters, vol. 50, 2023. http://dx.doi.org/10.1029/2023GL104960

Z. Hausfather, K. Marvel, G.A. Schmidt, J.W. Nielsen-Gammon, and M. Zelinka, “Climate simulations: recognize the ‘hot model’ problem”, Nature, vol. 605, pp. 26-29, 2022. http://dx.doi.org/10.1038/d41586-022-01192-2

S. Jain, A.A. Scaife, T.G. Shepherd, C. Deser, N. Dunstone, G.A. Schmidt, K.E. Trenberth, and T. Turkington, “Importance of internal variability for climate model assessment”, npj Climate and Atmospheric Science, vol. 6, 2023. http://dx.doi.org/10.1038/s41612-023-00389-0